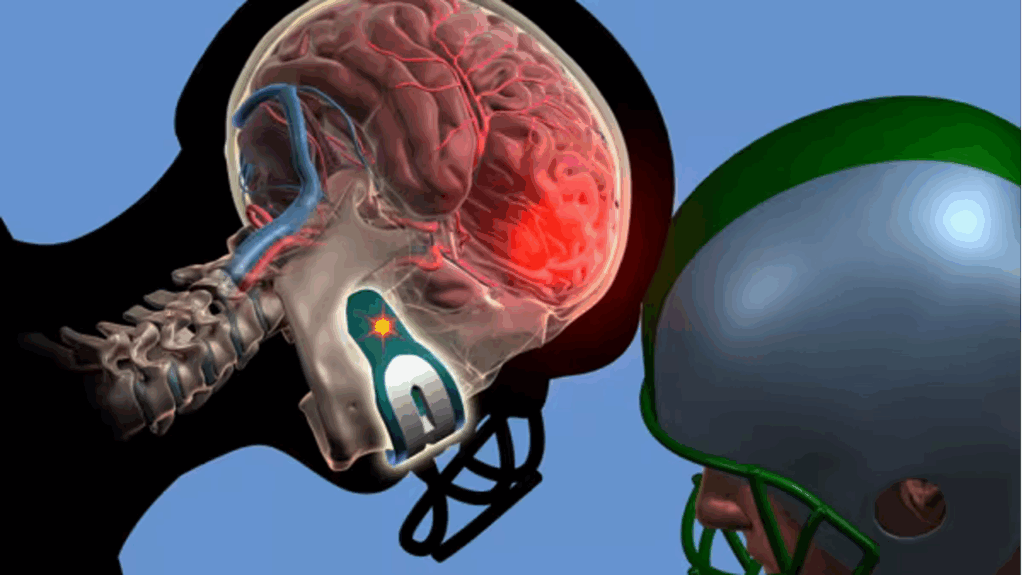

In recent years, instrumented mouthguards – mouthguards fitted with tiny accelerometers – have been introduced as a new way to measure head impacts in sports. The idea sounds simple: every time an athlete takes a hit, the mouthguard tracks how the head moves, giving trainers and doctors more information about concussion risk. However, a new study published in the Annals of Biomedical Engineering, titled “Instrumented Mouthguard Decoupling Affects Measured Head Kinematic Accuracy,” raises an important red flag: these devices may not be as reliable as we think if they don’t fit tightly in the mouth.

The study shows that even small gaps between a mouthguard and the teeth can create major errors in the data. In other words, the problem isn’t the sensor; it’s whether the mouthguard stays perfectly in place at the moment of impact, which can be unpredictable and hard to control.

Why This Matters

When it comes to measuring impact via a sensor in the mouth, accuracy is critical. Data that underestimates or overestimates the severity of a hit could make the difference between pulling an athlete off the field – or not. For athletes, parents, and medical providers, this study is a reminder that technology still has limits. Tools like instrumented mouthguards can provide helpful insights, but only if they are tested carefully and used alongside other objective measures, never as the sole decision-maker.

Key Findings from the Study

- Fit is Critical: Even a small gap between the teeth and mouthguard (as little as 1–2 millimeters) made the recorded accelerations much less accurate. The looser the fit, the more errors appeared.

- Frontal Hits Are Most Affected: Impacts to the front of the head produced far greater measurement errors than impacts from the rear. This is especially concerning since frontal hits are common in contact sports.

- Surface Type Matters: Padded impacts (like hitting a helmet or cushioned surface) caused more errors than hard, rigid impacts.

- Errors Leave a Signature: When a mouthguard slips or shifts during impact, the data looks different. The study showed that these faulty readings have unique “noise patterns,” meaning researchers can sometimes detect when a reading is unreliable and the athlete needs further assessment.

What This Means for Concussion Care

This research doesn’t mean instrumented mouthguards should be abandoned. Instead, it highlights how important fit, testing, and validation are before relying on them for real-world decision-making.

At Oculogica, we believe concussion care must combine evidence with patient-centered insight. EyeBOX, our FDA-cleared eye-tracking test, reduces guesswork by giving providers objective data after a head injury. Studies like this one remind us why having reliable, validated tools is important: the more accurate and objective the information, the better the care we can deliver.